Back in the 2010s, the “after hours” boasting among big corporates tended to revolve around the size, maturity and number of data lakes their enterprises had created. The “infra” gurus littered their dialogue with mentions of nodes, clusters and the “H-word” (Hadoop).

Riding on this mania were the big data platform enablers: Hortonworks, Cloudera and MapR. These were heady days when the C-suite listened to pitches from their CTOs, tried to digest the eye-watering budgets required, and dreamt of the promised data nirvana.

In the 2020s, a more sombre mood prevails. Some rather expensive lessons have been learnt, and the objectives and outcomes that revolve around the data lake are now more realistic.

But where did things go wrong? And how are those who watched from the sidelines while the pioneers invested and experimented now adapting their data strategy to avoid the same pitfalls?

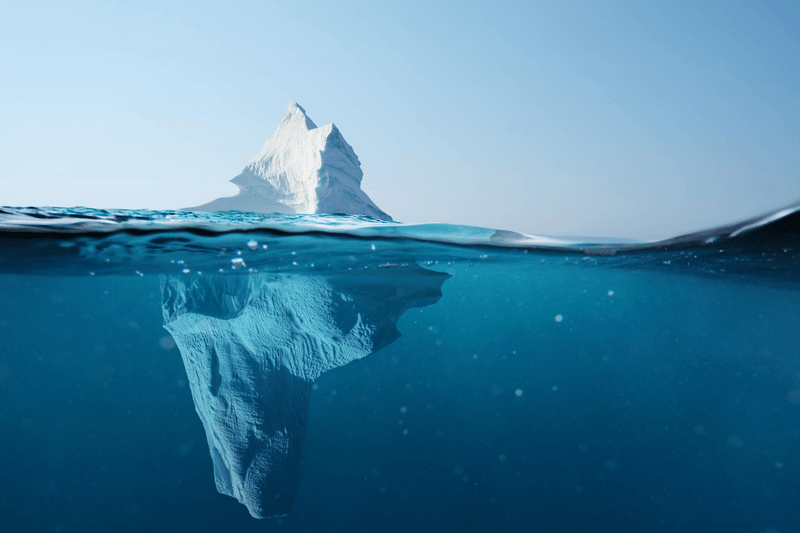

The data lake was certainly mis-sold and misrepresented. It was promoted as an enterprise-wide solution to the data and analytics puzzle, which exaggerated its benefit. The executives who championed its creation had lofty ambitions of aggregating a corporation’s legacy data into one vessel, and making it available and valuable to all functions.

Better data governance

Data lakes are usually created to expedite access to data, swap out existing data warehousing, or set up a lab environment for data scientists and engineers. The hope is that a range of processing capabilities and malleable data storage will allow for better data governance, especially around retention, quality and meta data. At the start of this project journey, most believed in a one-stop destination for an entire enterprise’s data and analytics.

This wishful thinking can only become reality if certain characteristics exist at the enterprise. There need to be sensible security and governance policies around the datasets in each infrastructure cluster. The personnel intended to use this data container must be sophisticated enough to extract and apply a variety of disparate, crude data that generally finds its way into the store without being groomed, classified or structured.

Anyone entering the fray now should have narrower and more specific ambitions that revolve around the requirements of users, an analytics use case, or a business unit. Data lake deployment should not be mistaken for a data strategy on its own. It is just a piece of an enterprise’s infrastructure. Once it is established, well governed and of use, it can be aimed at helping to achieve the objectives of stakeholders with specific desired outcomes.

Some enterprises approached the challenge aiming to create an endless, ever-growing lake in which data retains its value and end state forever. This misguided view means storage only ever increases, while retention and data optimization are treated as obsolete. The lake must somehow keep scaling.

This also underestimates the currency of data and the higher value of new data as a business develops. Over-reliance on old data can produce the wrong results and insights, and the burden of retaining all the meta data this approach requires is enormous.

The infinite-scale approach has cost implications even when the data is hosted in the public cloud. For an on-premises Hadoop deployment these costs are significant, because every Hadoop cluster node demands processing, memory and storage.


Management needs to understand that more data does not necessarily create a bigger asset, especially if that data is no longer relevant or instructive to where the business is going. This trap can be avoided with a strict retention policy that keeps the data in the lake fresh and useful. Inadequate data can also be rejected if it fails to meet defined data quality standards.
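As a rough illustration of how a retention window and a quality gate might work together, the sketch below keeps a record only if it is complete and still inside its retention period. The `Record` type, the 365-day window and the list of required fields are all hypothetical choices made for the example; a real policy would be set dataset by dataset.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical retention window; a real policy would be set per dataset.
RETENTION = timedelta(days=365)

# Hypothetical fields every record must carry to pass the quality gate.
REQUIRED_FIELDS = ("trade_id", "trade_time", "source_system")


@dataclass
class Record:
    fields: dict
    ingested_at: datetime


def passes_quality(record: Record) -> bool:
    """Reject records that are missing a required field or hold an empty value."""
    return all(record.fields.get(name) not in (None, "") for name in REQUIRED_FIELDS)


def is_fresh(record: Record, now: datetime) -> bool:
    """Keep only records still inside the retention window."""
    return now - record.ingested_at <= RETENTION


def curate(records, now):
    """Split records into those kept in the lake and those rejected or expired."""
    kept, dropped = [], []
    for record in records:
        target = kept if passes_quality(record) and is_fresh(record, now) else dropped
        target.append(record)
    return kept, dropped


if __name__ == "__main__":
    now = datetime(2024, 6, 1)
    good = {"trade_id": "T1", "trade_time": "09:30", "source_system": "oms"}
    records = [
        Record(good, now - timedelta(days=10)),   # fresh and complete: kept
        Record({"trade_id": "T2"}, now),          # missing fields: rejected
        Record(good, now - timedelta(days=400)),  # past retention: expired
    ]
    kept, dropped = curate(records, now)
    print(len(kept), len(dropped))  # 1 2
```

Running the rule at ingestion and again on a schedule is what stops the lake from growing without limit: stale records age out, and incomplete ones never get in.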

An enterprise data lake approach has its own pitfalls. The objective is to amalgamate all current data silos in one container as part of the physical infrastructure, so that the data is conceptually available to everyone in every function and the lake becomes a “one-stop shop” for the enterprise’s analytics capability.

There are multiple bear traps to sidestep. All the right data has to make its way into the lake. Easier said than done.

Some parts of the business might not understand how to get their data in, or might not get the right guidance on doing it, while others might not follow through or might not even want to include their data. Sometimes the sheer variety of data types and origins makes inclusion a huge challenge because the data and meta data models are inconsistent.

Cloud-based data storage is not well set up to respect and employ the precise data governance approaches that many organizations apply to their data at inception. This is even more dysfunctional when data from different regions is taken into account. The priority is often processing speed, and the collateral damage is an inability to recognize the context of the data.

Establish objectives

These approaches and their adoption can be encumbered further by a lack of clear strategy from the heads of data management. They may prioritize data lake creation without any established objective or governing principles.

There is an overriding need to examine who will use the data, to what end, and the data universe that must be captured to make that happen. A data lake can be a substantial part of what is required to deliver the right strategy, but it cannot be the starting point, the end point or the strategy itself.

It bears repeating that once different functions have been persuaded to put their data in the lake, they need the wherewithal to be able to analyze it effectively. The data still needs management and curation in order for the value, and its context, to be extracted.

Steve Livermore is an independent consultant at Livermore Management Compliance Consultancy, and has a number of tales of derring-do (with the scars to prove it) from his experience leading the compliance technology and surveillance teams of Tier 1 global investment banks.

“I was a surveillance head who took a different approach to creating data lakes,” he says. “I created a holistic data lake model without having the benefit of all my data in one place. I created what is best described as ‘data puddles’ that pulled together elements of data we felt were correlated, which we used to create a broader alerting capability than the vertical silos of trade and communications surveillance provided. We then started to bring in textual and behavioral data and created new alerts from those, which was extremely effective.”
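The “data puddle” idea, correlating trade and communications data that normally sit in separate surveillance silos, can be sketched as a simple cross-silo rule. This is only an illustration under stated assumptions: the `Trade` and `Message` types, the watch-list phrases and the 30-minute look-back window are all hypothetical and do not describe the alerting logic Livermore actually used.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Trade:
    trader: str
    time: datetime
    instrument: str


@dataclass
class Message:
    sender: str
    time: datetime
    text: str


# Hypothetical watch-list phrases and look-back window.
WATCH_TERMS = ("guarantee", "keep this quiet")
WINDOW = timedelta(minutes=30)


def cross_silo_alerts(trades, messages):
    """Pair each trade with any flagged message the same trader sent shortly before it."""
    alerts = []
    for trade in trades:
        for msg in messages:
            same_person = msg.sender == trade.trader
            lag = trade.time - msg.time
            in_window = timedelta(0) <= lag <= WINDOW
            flagged = any(term in msg.text.lower() for term in WATCH_TERMS)
            if same_person and in_window and flagged:
                alerts.append((trade, msg))
    return alerts
```

Neither silo would raise this alert alone: the trade looks ordinary to trade surveillance and the message looks ordinary to communications surveillance until the two are joined. The nested loop keeps the sketch readable; at production volumes messages would more likely be indexed by sender and time.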


“But as for strategy, having a data lake, and having one you run analytics on, are very different things. Let’s assume a data lake comes with cloud, machine learning and neural networks etcetera. If you pull all your data together and have a clear vision of what the data will ultimately do once it is centralized, you are in a position to start to apply advanced analytics technologies. Operating in a global financial services firm, it can be very challenging to identify all of the data points and engage internally so that you can pull all the data onto a platform you can then use. It is usually not the case that you have all this data already coming in from your other programs.

“A lot of firms have been doing a ‘root and branch’ data exercise to determine whether they are seeing everything they should be seeing, in a format that can be used. People have now realized they need the data strategy first, as well as the value and relevance of good, clean data. In addition to a strategy and governance framework, there is the need to ask what you want to do with that data.

“Finally you have this big lake of data, cleaned, normalized and available so you can interrogate it. That is a huge tick in the box as that is no small task. But while you have been doing that, you have not created any value as such on the output side. This has all been preparatory. While you build, you are not doing much if anything with the data.

“You need a ‘top-of-the-house’ view of how you will build your data model. It needs to come out of the CEO’s office and be run at that level. A bottom-up approach does not work, because everyone fights for the right to make the data work explicitly for them.

Processing data takes time

“If you are using machine learning and I am the one training that machine all the time, I get consistent output, but it will be skewed by me as an individual telling the machine what I want to find and the deltas of that. If you and I are training the machine, there is a less one-eyed view, but there needs to be a very clear strategy on what you are collectively trying to achieve. If your desired outcomes diverge, or the way you train the machine diverges, problems begin to appear. You might look at the suggested outputs and decline some as no longer of interest, so the machine gets confused very quickly and the alerts become extremely ineffective.

“With a broader set of data you can approach it and use it in different ways with interesting new outputs but this is expensive and slow. You need many stakeholders coming together often for a common goal. It involves a lot of design, development and build with no visible benefit until tomorrow or next year when it is finally ready. But if it is done properly, it does give a real competitive advantage to those using that approach.”

We also spoke to the Global Head of Surveillance at a North American investment bank to get another view.

“Data lakes are certainly not dead, though the first incarnations were not done in the most effective way – in the last two years there has been a trend away from creating a lake and putting everything in it. Many firms have made a completely new start in which the front office system that stores the data sets the schema. They pick the biggest system in the product class that the bank is trying to ‘data lake’ and present the 50 fields that are available. They specify the format everything needs to be in, and which fields are critical.
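The schema-first approach described here, where one front office system dictates the fields, their formats and which of them are critical, can be sketched as a validation step that runs before data lands in the lake. The field names, parsers and four-field schema below are hypothetical stand-ins for the 50 or so fields mentioned; only the split between critical and non-critical fields comes from the interview.

```python
from datetime import datetime

# Hypothetical schema taken from the largest front office system for one product class.
# Each entry maps a field name to (parser that enforces the format, is_critical).
SCHEMA = {
    "trade_id": (str, True),
    "trade_time": (datetime.fromisoformat, True),
    "quantity": (float, True),
    "trader_desk": (str, False),
}


def conform(row: dict):
    """Coerce a raw row to SCHEMA.

    Problems with critical fields go to `errors` (the row should be held back);
    problems with optional fields go to `warnings` (the row can still load).
    """
    clean, errors, warnings = {}, [], []
    for field, (parse, critical) in SCHEMA.items():
        problems = errors if critical else warnings
        raw = row.get(field)
        if raw is None or raw == "":
            problems.append(f"missing: {field}")
            continue
        try:
            clean[field] = parse(raw)
        except (TypeError, ValueError):
            problems.append(f"bad format: {field}")
    return clean, errors, warnings
```

For example, `conform({"trade_id": "T1", "trade_time": "2024-03-01T09:30:00", "quantity": "100"})` yields a clean row with no errors and a single warning about the missing `trader_desk`. Separating errors from warnings is one way to act on “which ones are critical”: only the former block a row.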


“I don’t think there was previously a focus on critical data elements, and the key users were not always involved. Often the front office would be the only ones consulted, and they would not worry about what other functions might need. Now people know they must have conversations about primary needs to outline the elements for every product class. That gets bolted onto the prevailing schema, which offers fields for everyone, such as trade time and order time, that populate every system.

“Then you can layer in the additional fields – for example, there is a huge difference between the data recorded for an interest rate swap and for an equity trade. Those fields need to be harmonized where possible and, if not, clearly split with a method of referencing between them as you add another data source.
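One way to picture this layering is a shared core of canonical fields (trade time, order time and so on) that every source maps into, plus product-specific fields kept separately but linked back by a common key. The source names, the alias tables and the use of `trade_id` as the linking key below are hypothetical choices for the sketch.

```python
# Hypothetical canonical fields shared by every product class.
CORE_FIELDS = {"trade_id", "trade_time", "order_time"}

# Hypothetical per-source renames that map local field names onto canonical ones.
FIELD_ALIASES = {
    "equity_oms": {"exec_ts": "trade_time", "ord_ts": "order_time"},
    "irs_booking": {"trade_date_time": "trade_time", "order_ts": "order_time"},
}


def harmonize(source: str, record: dict):
    """Rename fields to canonical names, then split core from product-specific fields.

    The extension part keeps trade_id so it can be joined back to its core row.
    """
    aliases = FIELD_ALIASES.get(source, {})
    renamed = {aliases.get(name, name): value for name, value in record.items()}
    core = {k: v for k, v in renamed.items() if k in CORE_FIELDS}
    extension = {k: v for k, v in renamed.items() if k not in CORE_FIELDS}
    extension["trade_id"] = renamed["trade_id"]  # reference back to the core row
    return core, extension
```

Adding a new data source then means adding one alias table: fields that can be harmonized land in the core, and anything unique to the product (a swap’s fixed rate, an equity trade’s venue) stays in its own extension, still reachable through the shared key.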

“We are making significant progress in fixed income surveillance as our data comes from those lakes. It is working and is effective. Another good test is to create, before building the lake, the tactical files that will feed those systems. Those tactical files then get transitioned into a strategic solution that creates the lake, rather than creating the lake and then providing the feeds. Feeds first, then do all the fixes and the work in the feeds, and then come back into that strategic solution.

“The data transformation element is enormous. The processing to put it into the schema takes several hours to run overnight as a lot needs to be changed and collated. I still think this is something that the industry will struggle with over the next five to ten years. There has never really been a push for this sort of globalized source. Everyone thought that transaction reporting would be the answer as it all has to be collected so we can all use it. Unfortunately it has not worked out like that. So people are taking their own course.

“The development and build is funded centrally but the ongoing usage and additional developments you require are funded based on universal need. If the need is unique to you, you cover it yourself.”