New York is the latest state to pass a law imposing broad safety regulations on the most advanced AI models.

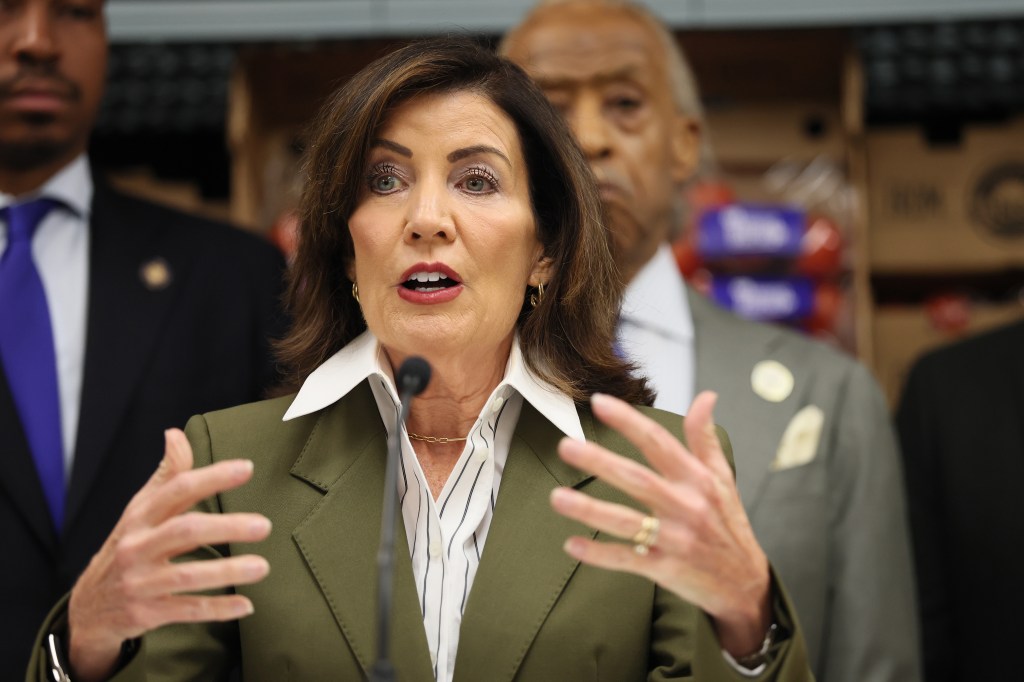

Governor Kathy Hochul signed the Responsible AI Safety and Education Act (RAISE Act) into law last week. The new law closely tracks elements of California’s recently enacted Transparency in Frontier Artificial Intelligence Act.

Hochul pointed to California’s similar law and to the need for state action in this area, saying: “This law builds on California’s recently adopted framework, creating a unified benchmark among the country’s leading tech states as the federal government lags behind, failing to implement common-sense regulations that protect the public.”

The new law puts the governor at odds with President Donald Trump, who this month issued an executive order challenging states’ ability to regulate the technology. The order argues that “[US-based] AI companies must be free to innovate without cumbersome regulation. But excessive State regulation thwarts this imperative.”

The order directs the creation of an AI Litigation Task Force to challenge state AI laws that conflict with federal law or “unconstitutionally regulate interstate commerce.”

There is no national standard yet, as lawmakers weigh several competing bills, but Trump said in the order that a future federal framework “should ensure that children are protected, censorship is prevented, copyrights are respected, and communities are safeguarded.”

Deeper dive

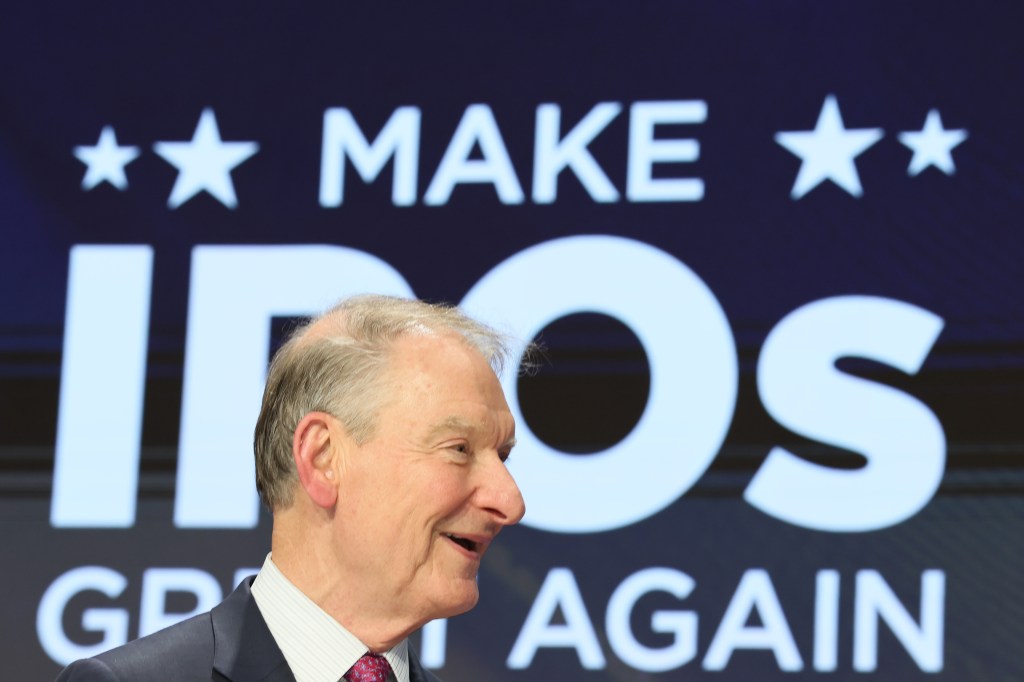

As State Senator Andrew Gounardes, a co-sponsor of the bill, describes it, the RAISE Act “requires large frontier AI developers to write, implement, publish, and comply with plans that describe in detail how they handle various safety standards and best practices, including how they assess the safety risks of their models; apply and review risk-mitigation techniques; use third parties to assess catastrophic risk potential; implement cybersecurity to prevent model theft; identify and respond to safety incidents; and institute frameworks to ensure best practices are followed.”

Developers must review their plans annually and publish a justification for any changes within 30 days. The Act also requires developers to designate a senior staff member responsible for ensuring compliance.

The law expands access to critical safety incident reports and sets a 72-hour deadline for reporting AI model safety incidents. The law’s examples of such incidents include a frontier model autonomously engaging in behavior a user did not request; a critical failure of technical controls; and theft of, malicious use of, or unauthorized access to the model.

Under the law, developers who fail to submit required reports or who make false statements could face civil penalties of up to $1m for a first violation and up to $3m for subsequent violations.

The RAISE Act also creates a new office within the New York State Department of Financial Services, funded by fees on developers themselves, to enforce the Act, issue rules and regulations, assess fees, and publish an annual report on AI safety.

Differing views

OpenAI and Anthropic, two of the large developers the law would cover, both expressed support for the RAISE Act, and both were quoted as saying that AI legislation in two large state economies is good for the innovation landscape overall.

“While we continue to believe a single national safety standard for frontier AI models established by federal legislation remains the best way to protect people and support innovation, the combination of the Empire State with the Golden State is a big step in the right direction,” OpenAI chief global affairs officer Chris Lehane told The New York Times.

“This is an enormous win for the safety of our communities, the growth of our economy, and the future of our society,” said Gounardes. “The RAISE Act lays the groundwork for a world where AI innovation makes life better instead of putting it at risk. Big tech oligarchs think it’s fine to put their profits ahead of our safety – we disagree. With this law, we make clear that tech innovation and safety don’t have to be at odds. In New York, we can lead in both,” he said.

Lobbying groups representing tech companies criticized the legislation early on, as it worked its way through the state legislature.

In a statement to CNBC, leaders of the bipartisan super PAC Leading the Future called an earlier version of the bill a “clear example of the patchwork, uninformed, and bureaucratic state laws that would slow American progress and open the door for China to win the global race for AI leadership.”