Data centers are a huge part of the discussion of artificial intelligence (AI), with questions revolving around efficiency, data protection, and energy consumption. These elements and more combine to pose a risk-management challenge to organizations.

How do you lower your risk in this arena? For a start, by correctly identifying risk and then fashioning appropriate strategies to reduce it.


Basically, de-risking a data center means implementing a comprehensive approach to identifying and mitigating internal and external threats to its security, operations, and supply chain.

There are many layers to this strategy, involving aspects of monitoring, machine learning, cybersecurity, infrastructure security, regulatory tracking, supply-chain management, climate assessments, and any tools that provide a better line of sight into the risk profile generally.

Using AI to do the de-risking

A specific type of risk-management business has sprung up, offering businesses a way to de-risk their data centers by de-risking their decision-making.

To help explain the process, GRIP spoke to Jelena Savic-Brkic, founder and CEO of Toronto-based, AI-enabled risk-management solution MiraNu, which was named one of the top 100 AI startup firms at the recent All In AI conference in Montreal (more details about the event below).

“We help de-risk your data centers through processes and providing the real-time information decision-makers need when building and maintaining these centers. It’s a comprehensive service done in real time with the help of AI,” she said.

Savic-Brkic noted that many businesses (and their executives) wanted to get into the game of owning a data center but had little to no idea how, and as a result were running around as though with scissors in hand.

“These are smart people, but they often focus on the lowest-hanging fruit in terms of risk and will fail to see some risk factors. That’s where we come in.”

One type of risk is how quickly whatever you put into that building becomes outdated. As you roll out new technology, there is a need for new cybersecurity protocols and compliance strategies, she said.

The idea, as Savic-Brkic put it, is to use “slow AI” that is responsible as it moves, embedding subject-matter expertise into machine learning in order to quantify risks. This can entail several steps.

Some of the steps in de-risking

When your whole operation is based around the remote storage, processing, or distribution of data, certain processes and safeguards need to be considered and a risk framework fashioned to enable them. To de-risk the endeavor, here are a few considerations Savic-Brkic recommends:

- Making your data sources communicate with each other better, having a more unified intelligence, and spotting bottlenecks that can arise – such as with your power agreements and power supply, or when you need a new vendor. Getting that whole-portfolio view helps the really large “hyperscalers” better communicate internally with their various departments and office locations. It creates a value chain.

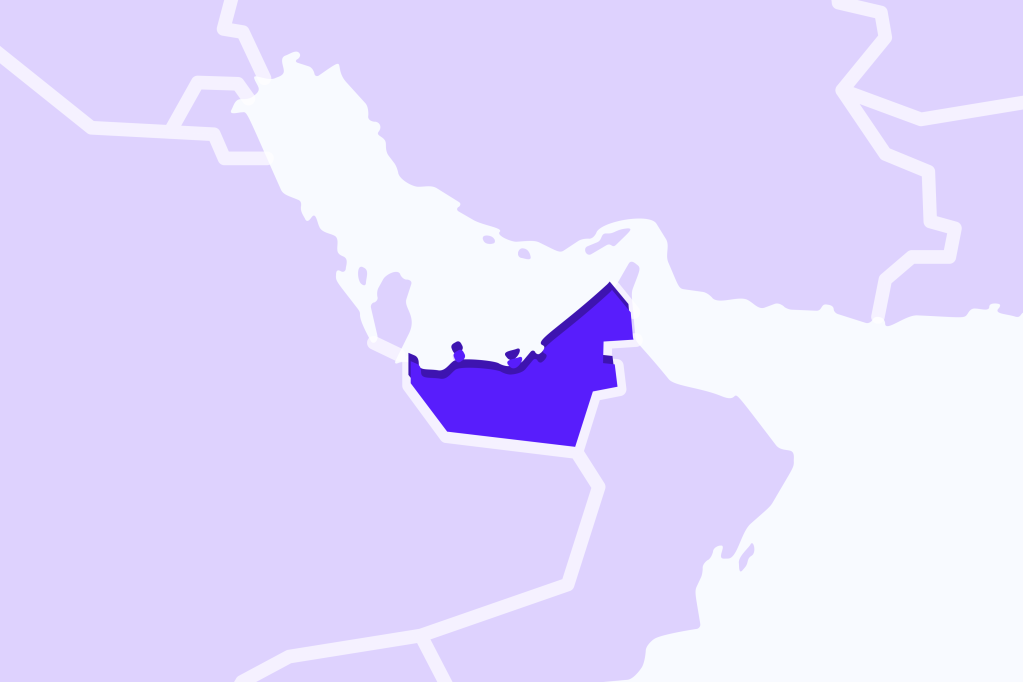

- Managing the real estate that is a data center poses its own risks. It’s critical to understand the regulatory obligations in this context and the risks specific to the contractual arrangements for building and owning these facilities, including the critical differences between owning one in New York City and owning one in Tokyo.

- Managing the real estate also entails getting insights and assistance with insurance rates and terms in specific areas to appreciate the risk differences involved (not just property insurance, but also cargo and supply-chain or cyber-risk insurance), and the litigation risks that come with owning any real estate.

- Evolving risk needs to be considered too, such as the effect of tariffs on the resources needed to maintain your data center. Whether that is high risk now or medium risk that could become high risk, it needs to be flagged and tracked. Export-control risks play a role too: you don’t want to learn later that you bought components sourced from China that ran afoul of export-control regulations, which are always in flux.

- De-risking also means spotting opportunities, such as identifying places where you can build something that someone has half-built or abandoned, or acquiring something that another party wants to offload.

- Appreciating state nuances in every respect, from the actual climate differences themselves, to varying regional sustainability commitments, to the laws and regulations pertaining to land and power usage. Plus, are there enough vendors to meet your needs in that location?

- Selecting the right vendor is always a risk, so it is important to get as much information as possible from one or more providers that can offer this due diligence. Machine learning can help narrow down the vendors equipped for your requirements and certified to do the work needed.

- Having a party examine whether an existing vendor is the best option versus others can save costs down the road, since vendors increasingly are becoming as specialized as the data centers themselves, and an AI tool can help benchmark what quality standards they need to meet to suit your needs.

- Future issues to track include discarded equipment ending up in landfills, creating yet another environmental issue to be solved. These are issues for commercial and public/government enterprises to consider and possibly work on jointly. The US Department of Energy offers incentives to businesses to re-use infrastructure at retired coal facilities for data centers and associated power infrastructure, and it is helping to commercialize enabling technologies it has developed. These include next-generation geothermal, advanced nuclear, long-duration storage, and efficient semiconductor technologies. It also offers tools to support data center owners and operators, utilities, and regulators, as do a bevy of service providers.
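The flag-and-track idea in the list above can be sketched as a simple risk register. This is only an illustration, not MiraNu's method: the `Risk` class, the 1-to-5 likelihood and impact scales, the score thresholds, and the sample entries are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int         # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int             # hypothetical scale: 1 (minor) to 5 (severe)
    trend: str = "stable"   # "rising", "stable", or "falling"

    @property
    def score(self) -> int:
        # Simple likelihood-times-impact scoring (an assumed convention).
        return self.likelihood * self.impact

    @property
    def level(self) -> str:
        # Thresholds are illustrative, not an industry standard.
        if self.score >= 15:
            return "high"
        if self.score >= 8:
            return "medium"
        return "low"


def watchlist(risks):
    """Return high risks plus medium risks that are rising, highest score first."""
    flagged = [
        r for r in risks
        if r.level == "high" or (r.level == "medium" and r.trend == "rising")
    ]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


# Made-up entries loosely based on the considerations listed above.
register = [
    Risk("Tariffs on replacement hardware", 3, 4, "rising"),
    Risk("Export-control change affecting components", 2, 5, "rising"),
    Risk("Vendor shortage in chosen region", 2, 3),
    Risk("Extreme heat exceeding cooling design", 4, 4),
]

for risk in watchlist(register):
    print(f"{risk.level:<6} {risk.score:>2}  {risk.name} ({risk.trend})")
```

The point of the `trend` field is the one made above: a medium risk that could become high is surfaced alongside the risks that are already high, rather than waiting until it crosses the threshold.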

You don’t know what you don’t know

The biggest obstacle that can trip you up, Savic-Brkic said, is thinking you have all the answers and being complacent about the knowledge and line of sight you bring to your operation.

This can be even more problematic for those with technology skills, because the risk-spotting and solving can seem as straightforward as the math and coding they perform.

Or they think what worked with one data center will carry over in full to another. “Improving processes depends on having more insight into your mistakes and blind spots, which we all have,” she said.

And people need to give themselves time to learn how to operate and manage a data center. AI tools built on years of research and subject-matter expertise could be a good start, and the right risk-advisory partner would bring those tools to help you navigate complex risks through each phase of the data center project life cycle.

In keeping with learning more, Savic-Brkic recommends a report from Aon that outlines what effective risk-management solutions look like in practice. This quote from it stands out: “Data centers are built based on historical weather data. However, climate change invalidates this assumption, increasing risks like severe droughts and extreme heat.”

The AI energy conundrum

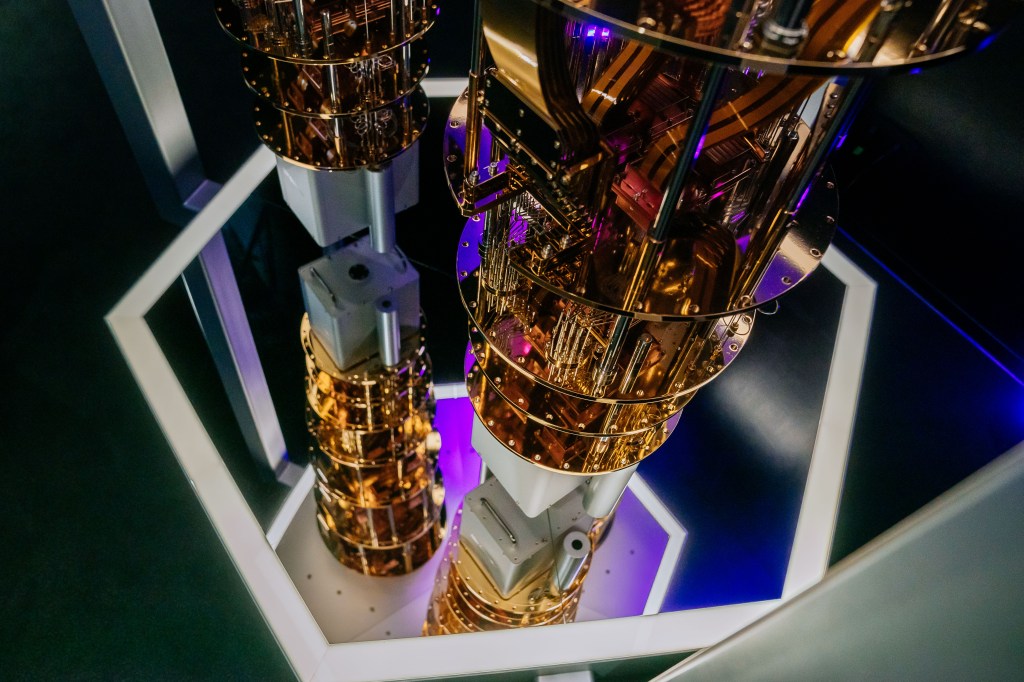

As the use of information technology grows, energy use by data centers and servers is expected to rise as well.

Data centers are among the most energy-intensive building types, using 10 to 50 times the energy per unit of floor space of a typical commercial office building.

A 2024 Department of Energy report found that data centers used about 4.4% of total US electricity in 2023 and are expected to use around 6.7% to 12% of total US electricity by 2028. The report indicates that total data center electricity usage rose from 58 terawatt-hours (TWh, a unit of energy equivalent to one trillion watt-hours) in 2014 to 176 TWh in 2023, and it estimates a rise to between 325 and 580 TWh by 2028.
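To put those figures in perspective, a quick back-of-the-envelope calculation gives the annual growth rates they imply. The sketch below uses only the numbers quoted above; the compound-growth formula and the `cagr` helper are illustrative and are not taken from the DOE report.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end / start) ** (1 / years) - 1


# Data center electricity use in TWh per year, as quoted from the DOE report.
usage_2014 = 58
usage_2023 = 176
low_2028, high_2028 = 325, 580

historical = cagr(usage_2014, usage_2023, 2023 - 2014)
projected_low = cagr(usage_2023, low_2028, 2028 - 2023)
projected_high = cagr(usage_2023, high_2028, 2028 - 2023)

# Total US electricity use implied by "176 TWh is about 4.4%" (approximate,
# because the 4.4% share is itself a rounded figure).
implied_us_total_2023 = usage_2023 / 0.044

print(f"2014-2023 growth:     {historical:.1%} per year")
print(f"2023-2028 projection: {projected_low:.1%} to {projected_high:.1%} per year")
print(f"Implied 2023 US total: about {implied_us_total_2023:,.0f} TWh")
```

On these numbers, usage grew by roughly 13% a year from 2014 to 2023. The low end of the 2028 estimate assumes that pace continues, while the high end implies growth of roughly 27% a year.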

Cooling accounts for about 40% of a data center’s energy use, making it a focal point in discussions about managing energy demand and environmental sustainability more generally.

Another challenge: States with significant data center infrastructure, such as Virginia and Texas, are experiencing accelerated energy demand, so the impact of data center power consumption is not only increasing but also unevenly distributed.

And this: We should be innovating with AI to fight the effects of climate change as well as to make our energy creation and energy use more efficient and sustainable over time. But the very facilities used to create and store the data behind those AI tools are straining power grids worldwide. It’s a contradiction that is being worked on, thankfully.

More on AI: The All In event

GRIP is featuring a range of articles from the All In Conference in Montreal – an event where government officials, developers, academics, and business leaders examined the world’s AI future. Discussions covered a range of topics, from Canadian digital sovereignty and talent development to the design of important safeguards for privacy, fairness, and transparency around the tools.

Canada’s growth and achievements in AI were an area of particular attention, with attendees hearing about why investing in quality AI tools is worth every penny. Each speaker noted that global investment and collaboration are required to truly realize the benefits of AI.