From the earliest mainframes to the latest neural networks, technology has always offered the same seductive proposition – automate away human error, bias, and mischief, and you’ll end up with something cleaner, faster, and more reliable.

It’s an appealing vision for management teams and regulators alike, especially for lines of business where the stakes are high and the rules are complex, as is the case in finance. If we could only take the “human factor” out of risk management, the logic goes, we’d avoid the costly lapses that have plagued financial institutions for decades.

This month’s much-anticipated release of OpenAI’s GPT-5 is but the latest entry in this argument. Some will point to it as evidence that artificial intelligence will soon automate away much of human work. Whether that’s wishful thinking or an imminent reality, only time will tell. But in the meantime, we don’t have to speculate about what happens when firms deploy cutting-edge tech at scale. The evidence is in – and it’s not encouraging.

EBA’s reality check

Since the late 2010s, “RegTech” has offered the promise of delivering safety and soundness at greater scale and lower cost. Under its broad umbrella sit governance, risk, and compliance (GRC) platforms; advanced surveillance and monitoring tools; cyber-security defenses; and, increasingly, AI-driven analytics.

The theory behind this technology is compelling: automate repetitive tasks, process vast data sets in real time, and identify anomalies before they become breaches. The result, we’re told, will be stronger controls and fewer costly failures.

But as the European Banking Authority’s (EBA) recent Fifth Opinion on Money Laundering and Terrorist Financing Risks makes clear, the results have been decidedly mixed. According to the EBA’s central EuReCA database, more than half of the financial institutions reporting on anti-money-laundering and counter-terrorist financing (AML/CFT) showed material weaknesses linked to the improper implementation and use of RegTech.

The villains aren’t the tools themselves but rather the cultures into which those tools are dropped. The reasons are depressingly familiar: inadequate in-house expertise, poor governance, insufficient oversight, and an over-reliance on off-the-shelf solutions not fit for purpose.

How we got here

For years, the supervisory model has focused on two familiar checkpoints:

- Inputs: Does the firm have the required systems, policies, and procedures in place?

- Outputs: Do performance metrics, regulatory reporting, and audit results suggest that those systems are working?

If both are “yes,” the box gets ticked – until it all blows up.

The EBA’s report reminds us that focusing solely on inputs and outputs misses the crucial middle ground: how work actually gets done. Between the inputs and the outputs lie the throughputs – the people, presumptions, and practices that determine whether any formal system will succeed in the messy reality of daily operations.

Unfortunately, this blind spot appears far too often in both firm governance and supervision. As long as policies and processes are implemented, on paper at least, attention shifts to more “productive” opportunities. In practice, that leaves open the possibility that systems and processes are inconsistently applied, poorly understood, or even actively bypassed.

All too often, the first sign that culture is having this effect comes only after a failure has taken place, just as the EBA observed.

This problem has been with us for a long time. The difference now is that, as technology (particularly AI-powered technology) has become more capable, the temptation is to commit even more to automated systems and processes. While doing so will certainly bring benefits, it will also give these weaknesses an even bigger stage and a faster amplifier.

Culture is the operating system

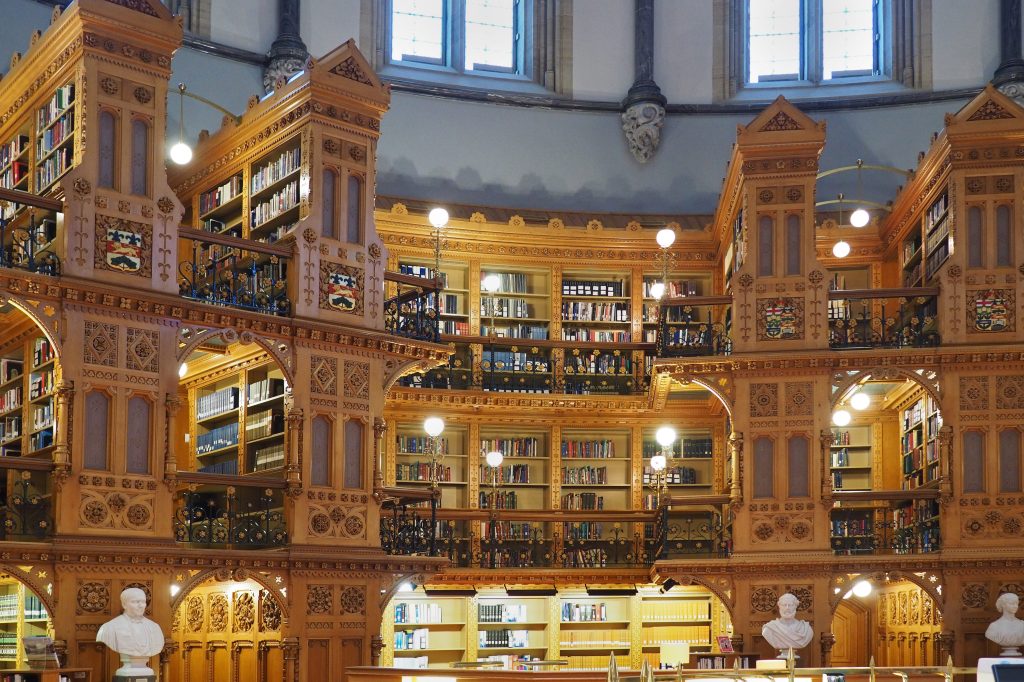

The EBA’s findings on RegTech deployment read like a textbook on throughput failure. Institutions may invest millions in advanced platforms, but without the right cultural scaffolding – governance that’s both informed and engaged, staff who are trained and empowered, and oversight that is curious rather than complacent – those tools become expensive décor.

This isn’t just an AML/CFT problem. We’ve seen the same dynamic in cyber-security (where breach after breach comes down to neglected patching or lax credential management) and in AI adoption (where models are deployed without sufficient understanding of their limitations, leading to bias, hallucination, or regulatory non-compliance). The EBA’s own report warns of AI being used to automate sophisticated fraud schemes and generate convincing deepfakes.

If billions in annual technology spend still leaves us exposed, it’s worth asking: Is the issue a lack of tools, or a lack of organizational capacity to use them effectively?

Why this matters now

Three features of the current environment make this a particularly urgent question:

1. Speed of innovation

FinTech growth shows no sign of slowing. The EBA notes that 70% of competent authorities see high or rising ML/TF risk in the sector, with compliance struggling to keep pace. As traditional institutions acquire FinTechs, these risks don’t stay contained – they spill into the wider financial ecosystem.

2. Concentration of risk

Many institutions rely on a handful of technology vendors. This creates systemic exposure. A single flawed update, design choice, or vulnerability can ripple across the sector, as we saw in last year’s CrowdStrike incident that grounded airlines and froze hospital systems.

3. Regulatory expectation shift

The EBA isn’t calling for less technology. Quite the opposite: it urges supervisors to identify and promote good practices, from dynamic risk profiling to efficient data management. But it also makes clear that governance, oversight, and human capability are inseparable from technical success.

Throughputs in practice

In our own work, we describe throughputs as the core of culture risk governance – the informal norms and routines that shape how formal policies are made manifest. When technology fails in a well-resourced institution, the cause is almost always traceable to one or more of these:

- People: Skills, incentives, peer norms, and capacity. Are teams equipped to use the tools as intended, and do they see value in doing so?

- Presumptions: Shared beliefs about what matters most. Is compliance seen as integral to performance, or as a box-ticking exercise to get auditors off the doorstep?

- Practices: The actual behaviors that fill the working day. Are exceptions investigated or waved through? Are alerts triaged thoughtfully or cleared en masse to hit volume targets?

The EBA’s data tells us that, in too many firms, these throughputs are either invisible to leadership and impossible to predict in advance, or they are treated as the fault of a handful of bad managers or employees. Neither is acceptable if we want technology investments to pay dividends.

Automation with purpose

If the decision to invest in systems is driven by the desire to satisfy supervisory requirements rather than by a thoughtful, governance-driven procurement process, the results will be pretty much what the EBA described. Systems are installed, but without adequate resources and sufficient management attention, performance (outputs) is lacking. Firms suffer as well, as limited resources are diverted towards inefficient and poorly implemented systems.

In a more effective system that recognized the impact of organizational throughputs, examiners would devote less attention to reviewing policy documents and system inventories and more time to validating that the lived reality of employees in a firm comported with those formal structures.

A great culture can overcome weak processes, but no amount of automation can make up for bad culture. Regulators need to assess the effectiveness of implementation, which shifts the discussion away from a tick-box review of systems and towards a more productive one that ensures the right culture risk governance is in place.

This is even more important as AI becomes more sophisticated. Regulators can’t hope to accurately assess potential technology risk, or even whether technology-driven processes are sound. However, with proper training, frameworks, and tools, supervisors can assess whether a firm is approaching its technology implementations (as well as its other objectives) with sound culture risk governance.

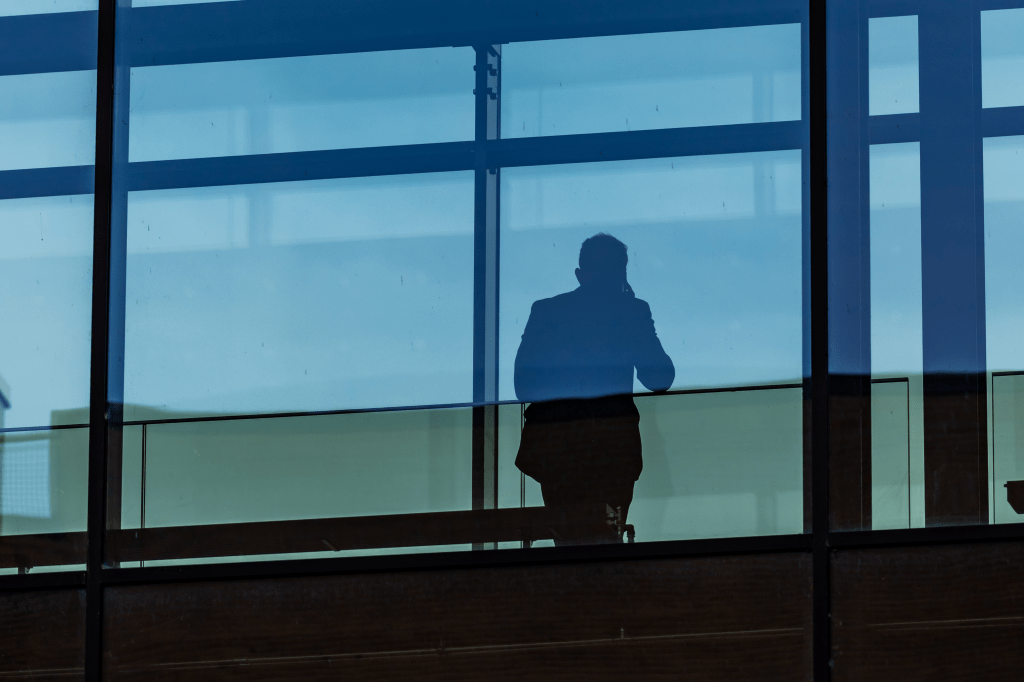

It means moving from a posture of “trust, but verify the technology” to one of “trust, but verify the practice.”

A leadership agenda

For boards and senior executives, the EBA’s message should be interpreted as an opportunity. If culture risk governance is the solution, then there are three steps management teams can take:

1. Focus on technology governance, not just technology implementation

Treat technology deployment not as a checklist of which tools and processes are in place, but rather as a holistic system of technology and people. Automation should be pursued, but only when management is confident the culture is capable, not as a means to paper over culture problems.

2. Map and measure throughputs

Move beyond inputs and outputs. Throughputs are where latent risk and operational vulnerabilities hide. New tools and frameworks make it possible to generate more accurate metrics on key throughputs than ever before, and such metrics make it possible to anticipate culture-related governance failures before they take place.

3. Close the loop with supervisors

The EBA is calling for supervisors to promote good practice. Share what’s working, what’s not, and how you’re adapting. That conversation can shape regulatory expectations in ways that benefit both sides.

Closing thought

The dream of a compliance process that runs on autopilot is not going away – nor should it. But as the EBA’s latest report makes plain, even the most sophisticated RegTech cannot outrun the culture into which it’s deployed.

The ghost in the machine is us. And until we align our people, presumptions, and practices with the capabilities of our tools, we’ll keep learning the same lesson: technology may change quickly, but organizational culture changes only when we make it a priority.

Erich Hoefer, Co-Founder and COO of Starling.

Starling Insights publishes original research and covers events related to the governance and supervision of cultural, behavioral, and other non-financial risks and performance outcomes.